The history of computers traces back to ancient times, with the abacus (c. 3500 BC by some accounts) among the earliest counting devices, marking the beginning of computational tools.

From mechanical devices like Charles Babbage’s Analytical Engine to modern electronic computers, the evolution reflects human ingenuity and technological advancement, and it has shaped society profoundly.

1.1. Overview of Computer Evolution

The evolution of computers began with ancient tools like the abacus (c. 3500 BC by some accounts), among the earliest computational devices. Over the centuries, designs for mechanical computers emerged, such as Charles Babbage’s Analytical Engine, laying the groundwork for modern computing. The 20th century saw the transition to electronic computers, with ENIAC (unveiled in 1946) as the first large-scale general-purpose electronic computer. This era introduced programmable machines, revolutionizing data processing. The development of the stored-program concept in the 1940s and the invention of the microchip in the late 1950s further accelerated progress, enabling smaller, faster, and more powerful computers. This timeline highlights humanity’s continuous quest for efficient calculation and information management.

1.2. Importance of Studying Computer History

Studying computer history provides insights into the foundational inventions and innovations that shaped modern technology. It reveals how early devices, like the abacus and mechanical computers, laid the groundwork for digital systems. Understanding the contributions of pioneers such as Charles Babbage and John Mauchly highlights the evolution of ideas and technologies. This knowledge also underscores the societal impact of computing, from code-breaking during World War II to the rise of the internet. By examining historical developments, we gain a deeper appreciation for how computers transformed industries, communication, and daily life, while inspiring future innovations in fields like artificial intelligence and quantum computing.

Ancient Computing Devices

The history of computing begins with ancient tools like the abacus (c. 3500 BC by some accounts), one of the earliest known calculating devices, and with the early counting systems that laid the groundwork for modern technology.

2.1. The Abacus (3500 BC)

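The abacus is considered one of the earliest computing devices. Some accounts date it to around 3500 BC, though the earliest well-attested forms come from Mesopotamia around 2700 to 2300 BC. Early versions used counters such as pebbles on a marked board, and later forms used beads on rods or wires, to perform basic arithmetic operations like addition and subtraction.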

Its simplicity and effectiveness made it a foundational tool for commerce and trade across ancient civilizations, including Mesopotamia, Egypt, and China, and it remained a primary counting aid for millennia.

2.2. Early Counting Systems

Early counting systems laid the groundwork for mathematical advancements. Tally sticks, with surviving examples dated to as early as around 35,000 BC, recorded counts as carved notches and served as primitive record-keeping tools for resources and events.

Written numeral systems emerged later. The Babylonians used a positional base-60 system, in which the value of a digit depends on its place, while the Egyptians used a decimal system that was additive rather than positional. These innovations facilitated trade and complex record-keeping, forming the basis of modern mathematical systems.
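To make the idea of a positional system concrete, the following minimal Python sketch converts a number into its digits in any base, including base 60. The function names `to_base` and `from_base` are illustrative choices, not drawn from any historical source, and the sketch shows only the place-value arithmetic, not how Babylonian scribes actually wrote numerals.

```python
def to_base(n: int, base: int) -> list[int]:
    """Return the digits of a non-negative integer in the given base,
    most significant digit first."""
    if n < 0 or base < 2:
        raise ValueError("n must be non-negative and base must be at least 2")
    digits = []
    while True:
        # Peel off the least significant digit on each pass.
        n, remainder = divmod(n, base)
        digits.append(remainder)
        if n == 0:
            break
    return digits[::-1]


def from_base(digits: list[int], base: int) -> int:
    """Rebuild the integer from its digits (most significant first)."""
    value = 0
    for digit in digits:
        # Shifting one place to the left multiplies the running value by the base.
        value = value * base + digit
    return value


print(to_base(3661, 60))  # [1, 1, 1], i.e. 1*3600 + 1*60 + 1
print(to_base(3661, 10))  # [3, 6, 6, 1]
```

The same quantity needs three digits in base 60 and four in base 10, which is one reason a large base suits compact record-keeping. Base 60 still survives in how hours, minutes, and seconds are counted.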

Mechanical Computers

Mechanical computers emerged as a bridge between manual calculation and automation. Charles Babbage’s Analytical Engine (1837) was the first design for a programmable, general-purpose mechanical computer. Other devices followed, advancing computational capabilities through gears and levers and setting the stage for modern computing.

3.1. Charles Babbage and the Analytical Engine

Charles Babbage, a British mathematician, is renowned for designing the Analytical Engine (1837), the first design for a general-purpose mechanical computer. Although never completed, it was to be programmed with punched cards, and it separated an arithmetic unit (the “mill”) from memory (the “store”), anticipating the central processing unit and memory of later machines. Ada Lovelace, recognizing its potential, published in 1843 what is widely regarded as the first algorithm intended for such a machine, and she is often described as the first computer programmer. Babbage’s vision laid the groundwork for modern computing, emphasizing logical operations and memory storage. His work symbolizes the transition from manual calculation to mechanized computation, making him a pioneer of computer science and engineering.

3.2. Mechanical Computing Devices (1800s-1900s)

The 19th century saw the rise of mechanical computing devices, many building on Charles Babbage’s ideas. Babbage began work on his Difference Engine in the 1820s but completed only a portion of it in his lifetime. His later design, Difference Engine No. 2, was finally built by the Science Museum in London, with the calculating section completed in 1991 and the printing mechanism in 2002. Other inventors, like Georg and Edvard Scheutz, built working difference engines inspired by Babbage’s work. These devices were primarily used to produce mathematical tables and scientific calculations, marking the transition from theoretical concepts to practical tools. They laid the groundwork for understanding mechanical computation, bridging the gap to the electronic era.

The Dawn of Electronic Computing

The transition from mechanical to electronic computing in the mid-20th century revolutionized technology, with ENIAC (unveiled in 1946) emerging as the first large-scale general-purpose electronic computer and marking the beginning of the digital age.

4.1. ENIAC (1946)

ENIAC, the Electronic Numerical Integrator and Computer, was the first large-scale general-purpose electronic computer, developed by John Mauchly and J. Presper Eckert, completed in late 1945, and publicly unveiled in 1946. It used roughly 18,000 vacuum tubes to perform calculations, weighed over 27 tons, and occupied 1,800 square feet. ENIAC was commissioned during World War II to compute artillery firing tables for the U.S. Army, although it was not finished until after the war ended. It could perform about 5,000 additions per second, a significant leap from mechanical computers. ENIAC was programmed by setting switches and plugging cables, so its programmability was limited, but it laid the foundation for modern computing and influenced future computer designs.

4.2. The Atanasoff-Berry Computer (ABC)

The Atanasoff-Berry Computer (ABC) is often credited as the first electronic digital computer. John Vincent Atanasoff designed it and built it with graduate student Clifford Berry at Iowa State College between 1939 and 1942. It used binary arithmetic and a regenerative memory system to solve systems of linear equations. Unlike ENIAC, the ABC was not programmable: it was a special-purpose machine built for that one class of problem. Work stopped in 1942 when Atanasoff left for wartime research, and the machine was never made fully reliable. Despite this, the ABC’s design showcased early innovations in digital computing and laid groundwork for later machines.

4.3. Colossus (1944)

Colossus, often described as the world’s first programmable electronic digital computer, was designed by Tommy Flowers and his team at the Post Office Research Station at Dollis Hill and began operating at Bletchley Park, UK, in 1944. Built to help break the German Lorenz cipher during WWII, it used vacuum tubes and processed data far faster than earlier electromechanical equipment. Colossus played a crucial role in deciphering enemy communications, significantly aiding Allied efforts. Despite its historical significance, Colossus remained classified after the war, limiting its recognition until declassification in the 1970s. It stands as a pivotal milestone in computer history.

Generations of Computers

Computers are conventionally grouped into five generations, running from vacuum tubes to artificial intelligence. Each generation introduced a new core technology that improved performance, reduced size, and expanded what computers could do.

5.1. First Generation (1940s-1950s): Vacuum Tubes

The first generation of computers, spanning the 1940s to 1950s, relied on vacuum tubes as their main electronic components. These bulky machines were prone to overheating and required significant power, but they marked the beginning of electronic computing. ENIAC (1946), developed by John Mauchly and J. Presper Eckert, was a landmark machine of this era, weighing over 27 tons and using roughly 18,000 vacuum tubes. Despite their limitations, these computers laid the foundation for modern computing, enabling early applications like code-breaking and scientific calculations. The transition to transistors in the next generation would address the inefficiencies of vacuum tubes.

5.2. Second Generation (1950s-1960s): Transistors

The second generation of computers emerged in the 1950s with the introduction of transistors, which replaced bulky vacuum tubes. Transistors were smaller, faster, and more reliable, reducing power consumption and heat generation. This era saw the development of high-level programming languages like FORTRAN and COBOL, making computers more accessible for scientific and business applications. Machines like the IBM 1401 and UNIVAC 1107 became popular, greatly expanding commercial computing. The transistor era laid the groundwork for miniaturization and efficiency, paving the way for integrated circuits in the next generation.

5.3. Third Generation (1960s-1970s): Integrated Circuits

The third generation introduced integrated circuits, in which multiple transistors were combined on a single silicon chip, enhancing performance and reducing size. This led to faster processing, lower costs, and improved reliability. Computers like the IBM System/360 and PDP-8 became iconic, and the era saw the development of operating systems and time-sharing capabilities. Integrated circuits enabled the creation of smaller, more powerful machines, advancing both business and scientific computing. This era also saw the rise of minicomputers and the foundation for modern computing architectures, setting the stage for the microprocessor revolution in the next generation.

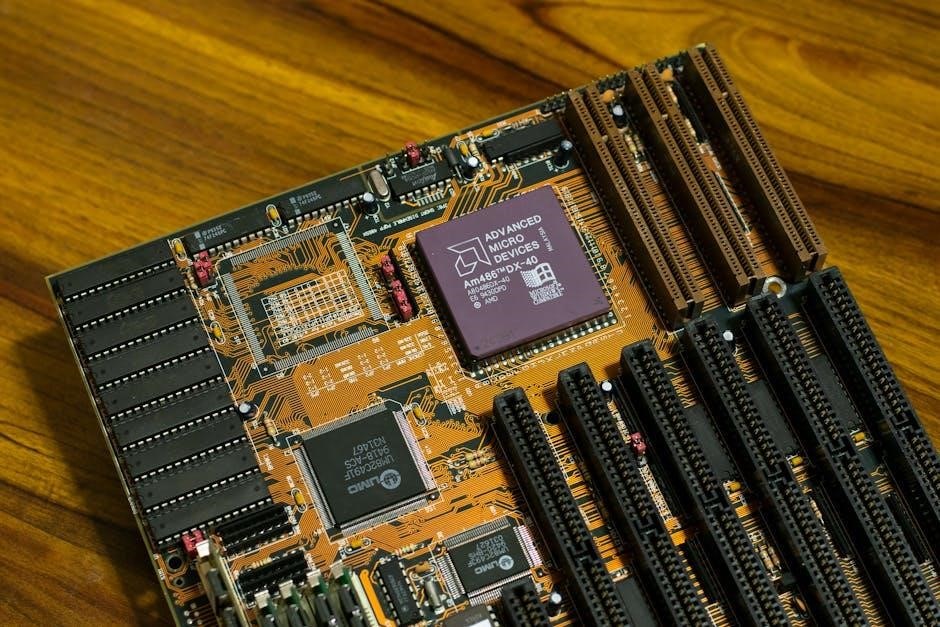

5.4. Fourth Generation (1970s-1980s): Microprocessors

The fourth generation marked the advent of microprocessors, with Intel’s 4004 (1971) integrating the central processing unit (CPU) onto a single chip. This revolutionized computing, enabling smaller, faster, and more affordable machines. Personal computers emerged, with Apple and IBM leading the market. Graphical user interfaces (GUIs) reached mainstream users in the 1980s, enhancing usability. The microprocessor’s impact was profound, democratizing access to computing power and transforming industries. This era also saw the rise of software development, with programming languages like BASIC and C gaining prominence and further accelerating technological advancement.

5.5. Fifth Generation (1980s-Present): Artificial Intelligence

The fifth generation of computers, beginning in the 1980s, focuses on artificial intelligence (AI) and advanced parallel processing. This era brought neural networks, machine learning, and natural language processing into practical use, enabling machines to perform complex tasks with little human intervention. AI-driven systems like IBM’s Watson and voice assistants exemplify this generation’s capabilities. The integration of AI into healthcare, finance, and transportation has transformed these industries. As AI continues to evolve, it is expected to make computing more intuitive and powerful. This generation emphasizes not just computation but adaptive, human-like problem-solving.

Key Inventions and Innovations

The microchip, or integrated circuit, first demonstrated by Jack Kilby at Texas Instruments in 1958, revolutionized computing by integrating multiple transistors into a single package, reducing size and cost while enhancing performance.

6.1. The Development of the Microchip

The microchip was first demonstrated by Jack Kilby at Texas Instruments in 1958 and was developed independently by Robert Noyce at Fairchild Semiconductor in 1959. By integrating multiple transistors into a single package, it significantly reduced size and cost. This advancement enabled the production of smaller, faster, and more affordable electronic devices, revolutionizing the computer industry. The microchip’s development was pivotal in the transition from bulky machines to compact, efficient systems.

6.2. Invention of the Stored-Program Concept

The stored-program concept allows a computer to hold both data and instructions in the same memory. Because the program is just another set of memory contents, the machine can load and run a different program without any physical rewiring. Developed in the 1940s, the concept laid the foundation for modern computing by making machines programmable and flexible. Early computers like ENIAC required manual reconfiguration for each new task, whereas a stored-program machine can run one program after another simply by loading new instructions. This breakthrough greatly improved computational efficiency and paved the way for general-purpose computers, making it a cornerstone of modern computer architecture.
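A toy example may help illustrate the stored-program idea. The Python sketch below simulates a tiny machine whose single memory list holds both instructions and data. The instruction encoding (opcode times 100 plus address) and the four opcodes are hypothetical choices made for this illustration and are not modeled on any historical machine.

```python
# Hypothetical opcodes for a toy machine (illustration only).
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory: list[int]) -> list[int]:
    """Execute the program stored in memory and return the final memory."""
    memory = list(memory)  # work on a copy so the caller's list is unchanged
    accumulator = 0
    pc = 0  # program counter: address of the next instruction
    while True:
        # Fetch the instruction word and decode it into opcode and address.
        opcode, address = divmod(memory[pc], 100)
        pc += 1
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            accumulator = memory[address]
        elif opcode == ADD:
            accumulator += memory[address]
        elif opcode == STORE:
            memory[address] = accumulator
        else:
            raise ValueError(f"unknown opcode {opcode}")


# Instructions (cells 0-3) and data (cells 5-7) share one memory.
program = [
    105,  # cell 0: LOAD the value in cell 5
    206,  # cell 1: ADD the value in cell 6
    307,  # cell 2: STORE the result into cell 7
    0,    # cell 3: HALT
    0,    # cell 4: unused
    2,    # cell 5: data
    3,    # cell 6: data
    0,    # cell 7: result goes here
]

print(run(program)[7])  # 5
```

Running a different computation only requires passing a different list to `run`; nothing about the simulated machine itself changes, which is the flexibility the stored-program concept provides.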

6.3. Breakthroughs in Memory and Storage

Advancements in memory and storage have been pivotal in computer evolution, enabling machines to process and retain vast amounts of data efficiently. Early systems relied on punched cards and magnetic drums, but the introduction of magnetic core memory in the 1950s marked a significant leap. Later, integrated circuits, first demonstrated in 1958, made semiconductor memory possible, greatly improving storage capacity and access speeds. Modern innovations like solid-state drives (SSDs) and cloud storage have further transformed data management, offering faster, more reliable, and more scalable solutions. These breakthroughs have been essential to the growth of computing power and the ability to handle complex tasks.

Notable Computer Scientists and Inventors

Pioneers like John Mauchly, J. Presper Eckert, John Vincent Atanasoff, and Tommy Flowers made groundbreaking contributions to computer development, shaping modern computing through their innovative designs and inventions.

7.1. John Mauchly and J. Presper Eckert

John Mauchly and J. Presper Eckert were pivotal figures in computer history, renowned for building ENIAC (1946), the first large-scale general-purpose electronic computer. Their collaboration enabled rapid calculations for military and scientific applications, with Eckert’s engineering expertise complementing Mauchly’s ideas. They also took part in the design work on EDVAC, from which the stored-program concept emerged, a concept most often associated with John von Neumann’s 1945 report. After ENIAC, they built BINAC (1949), an early stored-program computer, and founded the Eckert-Mauchly Computer Corporation, which developed UNIVAC I (1951), the first commercial computer produced in the United States. Their contributions laid the foundation for the computer industry.

7.2. John Vincent Atanasoff

John Vincent Atanasoff, an American physicist, is celebrated for inventing the Atanasoff-Berry Computer (ABC), often credited as the first electronic digital computer. His use of binary arithmetic and electronic memory, conceived in the late 1930s and built between 1939 and 1942, laid groundwork for modern computing. The ABC’s design introduced novel concepts such as regenerative memory and a separate arithmetic unit. Atanasoff’s work was long overlooked, but a 1973 U.S. federal court ruling (Honeywell v. Sperry Rand) invalidated the ENIAC patent and held that ENIAC’s basic ideas derived from his, though historians still debate how much the ABC influenced ENIAC. He is now recognized as a pioneer in the field of computing.

7.3. Tommy Flowers and Colossus

Tommy Flowers, a British engineer, played a pivotal role in developing Colossus, often described as the world’s first programmable electronic digital computer, during World War II. Designed to help break the German Lorenz cipher, Colossus was instrumental at Bletchley Park, helping Allied forces decipher critical communications. Flowers’ confidence in large-scale vacuum tube circuitry and his leadership of the project ensured its success. Colossus’s high-speed processing transformed code-breaking, and its work is widely credited with helping to shorten the war. Despite its historical importance, Colossus remained classified after the war, delaying its recognition as a foundational milestone in computer history. Flowers’ contributions to computing and cryptography are now widely acknowledged.

The Impact of Computing on Society

Computing transformed society by revolutionizing communication, work, and entertainment. It enabled global connectivity, personal computing, and further technological advancement, reshaping daily life and ushering in the digital age.

8.1. Early Applications in Code-Breaking

Code-breaking was one of the earliest and most critical applications of computing. During World War II, machines such as Colossus were used to break enemy ciphers, significantly aiding the war effort. Colossus, designed by Tommy Flowers, is often described as the first programmable electronic digital computer, and it played a key role in breaking the German Lorenz cipher, giving the Allies a strategic advantage. ENIAC, by contrast, was built for ballistics calculations rather than code-breaking. These early machines demonstrated the power of computing in solving complex problems, laying the groundwork for future advances in cryptography and intelligence gathering. Their success underscored the importance of computing to national security.

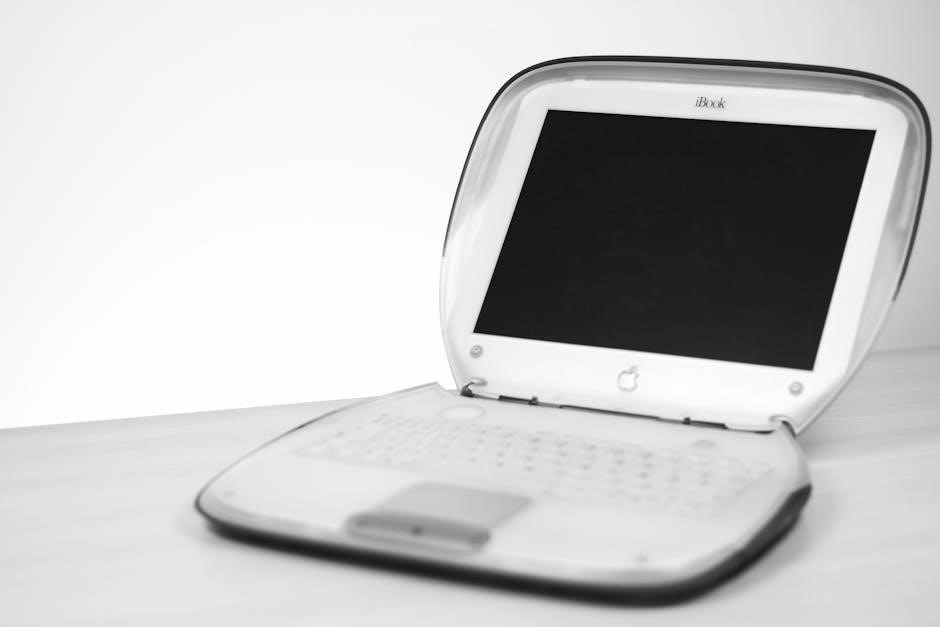

8.2. Computers in the Home

The spread of home computers in the late 1970s and 1980s marked a significant shift in technology adoption. Machines like the Commodore 64 and Apple Macintosh brought computing into personal spaces, enabling individuals to perform tasks like word processing, gaming, and financial management. This democratization of technology sparked a cultural change, making computers accessible beyond institutional settings. The IBM PC (1981) further popularized personal computing, setting widely adopted standards for compatibility. As affordability and user-friendly interfaces improved, computers became integral to daily life, revolutionizing education, entertainment, and communication. This era laid the foundation for today’s ubiquitous personal computing.

8.3. The Rise of the Internet

The rise of the Internet transformed global communication and information sharing. Originating from ARPANET in 1969, it expanded in the 1980s after ARPANET adopted the TCP/IP protocols in 1983, enabling worldwide connectivity. Tim Berners-Lee proposed the World Wide Web in 1989, and the 1990s saw the Internet’s commercialization and the Web’s rapid growth. This revolutionized access to information, e-commerce, and social interaction. The Internet’s impact on society has been profound, reshaping education, entertainment, and business. Its evolution continues with advances in speed, security, and accessibility, making it an indispensable tool in modern life.

Modern Computing and Future Trends

Modern computing focuses on advancements like supercomputers, cloud computing, and quantum computing. Future trends include deeper AI integration, greater processing power, and the continued transformation of industries worldwide.

9.1. Supercomputers and High-Performance Computing

Supercomputers represent the pinnacle of computational power, enabling complex tasks like weather forecasting, scientific simulations, and data analysis. Their roots lie in the large-scale machines of the 1940s, such as ENIAC and Colossus, but the CDC 6600 (1964) is generally regarded as the first supercomputer, and the Cray-1 (1976) marked a significant milestone. Today, supercomputers use parallel processing and advanced architectures to achieve speeds measured in petaflops and, for the fastest systems, exaflops. Modern supercomputers like Summit and Fugaku drive breakthroughs in fields such as medicine, climate modeling, and artificial intelligence. As high-performance computing advances, it may be combined with emerging technologies like quantum computing to tackle even harder problems.

9.2. Cloud Computing and Distributed Systems

Cloud computing grew out of ideas developed in the late 20th century, revolutionizing data storage and processing by enabling on-demand access to shared computing resources. Early innovations, such as virtualization and distributed systems, laid the groundwork. From the mid-2000s, platforms like Amazon Web Services (launched in 2006) and Microsoft Azure (2010) began offering scalable infrastructure. Cloud computing allows businesses to reduce costs and enhance flexibility, while distributed systems enable efficient data management across networks. These technologies underpin modern applications, from social media to big data analytics, and continue to evolve with advances in edge computing and hybrid cloud solutions.

9.3. Quantum Computing

Quantum computing applies quantum mechanics to problems that are intractable for classical machines. Unlike classical computers, quantum computers use qubits, which exploit superposition and entanglement to run certain algorithms far faster than the best known classical methods. The idea emerged in the 1980s and gained momentum with breakthroughs like Shor’s algorithm (1994). Companies like IBM and Google have built quantum processors, with Google claiming quantum supremacy in 2019. This technology promises advances in cryptography, optimization, and AI, though challenges like error correction and scalability persist. The future of computing likely lies in hybrid models that combine classical and quantum systems.